SOTA Face Recognition Systems: How to Train Your AI

Overview: Face Recognition Market

Face recognition, the modern-day software used to protect against fraud, track down criminals and confirm identities, is one of the 21st century’s most powerful tools.

In 2019, the face recognition market was estimated at over $3.6 billion. This number continues to grow, with a predicted $8.5 billion in revenue by 2025 across governmental and commercial applications ranging from security and health to banking and retail, among thousands of others.

The Man-Machine Approach: Bledsoe’s Manual Measurements

The origins of face recognition date back to the 1960s, when American mathematician Woodrow “Woody” Bledsoe co-founded Panoramic Research Incorporated, where he worked on a system specializing in the recognition of human facial patterns. During this time, Bledsoe experimented with several techniques. One of them was called the ‘Man-Machine’ Approach, in which a bit of human help was part of the equation.

Using a RAND tablet (often referred to as the iPad’s predecessor) and working with hundreds of photographs, he manually recorded the coordinates of multiple facial features on a grid using a stylus. From there, he transferred the accumulated data to a database he had programmed himself. When new photos of the same individuals were introduced, the system was able to correctly retrieve the photo from the database that most closely matched the new face.
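To make the idea concrete, here is a minimal sketch of "retrieve the closest match" using nearest-neighbour search over landmark coordinates. It is not Bledsoe's actual program: the names (`database`, `closest_match`), the four landmarks per face and all coordinate values are made up for illustration.

```python
import math

# Hypothetical stored photos: each face is a list of (x, y) landmark
# coordinates noted by hand on a grid. All values are invented.
database = {
    "person_a": [(30.0, 40.0), (70.0, 40.0), (50.0, 60.0), (50.0, 80.0)],
    "person_b": [(28.0, 42.0), (75.0, 41.0), (52.0, 66.0), (51.0, 90.0)],
}


def distance(face_1, face_2):
    """Sum of Euclidean distances between corresponding landmarks."""
    return sum(math.dist(p, q) for p, q in zip(face_1, face_2))


def closest_match(new_face, db):
    """Return the name whose stored landmarks are nearest to new_face."""
    return min(db, key=lambda name: distance(new_face, db[name]))


# A new photo whose landmarks sit close to those stored for person_a.
new_photo = [(31.0, 41.0), (69.0, 39.0), (50.0, 61.0), (49.0, 79.0)]
best = closest_match(new_photo, database)
```

A real system would also need to normalise for head size, tilt and camera distance before comparing coordinates; the sketch skips that step.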

Sounds like he would have described his career as picture-perfect 📷

Although one of his last projects was to assist law enforcement in matching criminal profiles against mugshots, the technology’s story was far from over. Soon after, commercial applications skyrocketed, with other scientists removing the human step from the ‘Man-Machine’ approach. One such example was Japanese computer scientist Takeo Kanade, who created a program that could automatically extract facial features with zero human input, using a bank of digitized images from the 1970 World’s Fair.

Modern Face Recognition: Commercial Uses

Nowadays, face recognition systems are widely used commercially. Although the technology has a reputation for being potentially intrusive, it is also paving the way for increased national and international security, along with advanced asset management functionalities.

Here are some examples:

Mobile Phone Industry: Perhaps the most well-known example of face recognition technology is something most of us use every day: our cell phones. Apple’s Face ID was introduced in 2017 with the launch of iPhone X. Face ID provides a secure way to authenticate an iPhone user’s identity using face recognition. This technology allows individuals to unlock their phones, make purchases and sign into apps securely. By comparing depth maps of a face (captured with an infrared sensor), Apple added an extra layer of security, making it much harder to fake a person’s identity (e.g. by holding up a printed picture of that face).

Banking Industry: With high levels of fraud related to ATM cash withdrawals, some large banking corporations such as National Australia Bank are investing in research related to client ID security. In its initial experiments, the bank integrated Microsoft’s ‘Windows Hello’ facial recognition technology into a test ATM setup, in which a face scanner was used to verify a client’s identity.

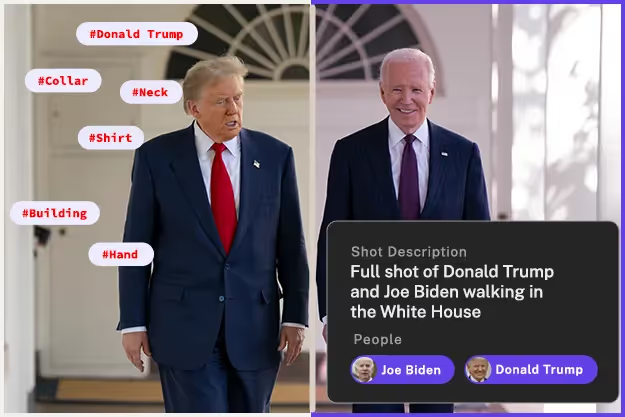

Sports & Media Rights Holders: Many rights-holders are using face recognition to work smarter and more quickly with their media assets. For example, the French Federation of Rugby (FFR) adopted Moments Lab’s media asset management system, whose built-in face recognition functionalities, among others, automatically analyze internal content and detect specific players, objects or logos across many hours of raw video footage or photos.

Hotel Industry: Checking in is now easier than ever at certain Marriott hotels in China thanks to a recent face recognition software partnership with Alibaba, the Chinese tech giant. Upon entry, guests approach face recognition kiosks, which scan their faces, confirm their identities and check them in automatically. A global roll-out is pending a successful assessment of the initiative.

Newsrooms: Many newsrooms, or should we say ‘news labs’, have turned retrieving the perfect news extracts for a story into a science, and do it in record-breaking time. When creating news segments, newsroom editors and producers are tasked with delivering the perfect shots, which they can usually search for in their media asset management systems. Throw face recognition into the mix, and things get a whole lot quicker… and more profitable.

Security & Law Enforcement: Combatting crime and terrorism one image at a time! One example is face biometrics, which are used when cross-checking and issuing identification documents. The same technology is increasingly being used at border checks, such as Paris-Charles de Gaulle airport’s PARAFE system, which uses face recognition to compare an individual’s face with their passport photo.

Limitations of Traditional Face Recognition Systems

Face recognition systems have the potential to be extremely effective tools, but even the most powerful systems have limitations for users, such as:

Database Aggregation: Usually, face recognition systems rely on pre-existing datasets of legally obtained faces of known profiles, supplied by partner providers such as Microsoft Azure and IBM Watson. In some use cases, newsrooms for example, users work with internal audiovisual assets (photos and videos) and need a system they can train to recognize individuals who are unknown to those datasets, across thousands of hours of audiovisual content. Essentially, they need to build their own dataset of known individuals… which requires some human help.

Facial Occlusions: Recognizing facial features is challenging when an individual’s face is partially obscured (e.g. by a scarf, a mask, a hand over the mouth, or a side profile). Because occlusion distorts the features and alignments that the face recognition system relies on to determine identity, the result is usually no recognition or incorrect recognition.

False Positives: Many face recognition systems cannot identify individuals with a high level of accuracy. For example, if two profiles look alike and share multiple facial features, a machine will sometimes return the incorrect profile.
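One common way to soften the look-alike problem is to check how far the best match is ahead of the runner-up, and to hand close calls to a human. The sketch below illustrates that idea only; the function name `review_decision`, the thresholds and the similarity scores are all assumptions, not values taken from any particular product.

```python
def review_decision(scores, min_score=0.8, min_margin=0.05):
    """Classify a match as 'accept', 'review' or 'reject'.

    scores maps profile names to similarity scores in [0, 1].
    Both thresholds are illustrative assumptions.
    """
    if not scores:
        return None, "reject"
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    best_name, best = ranked[0]
    runner_up = ranked[1][1] if len(ranked) > 1 else 0.0
    if best < min_score:
        return None, "reject"        # nobody matches well enough
    if best - runner_up < min_margin:
        return best_name, "review"   # look-alikes: ask a human
    return best_name, "accept"


# Two look-alike profiles score almost the same, so a human should check.
lookalikes = {"profile_a": 0.91, "profile_b": 0.89, "profile_c": 0.30}
# One profile is clearly ahead, so the match can be accepted.
clear = {"profile_a": 0.93, "profile_b": 0.41}

lookalike_result = review_decision(lookalikes)
clear_result = review_decision(clear)
```

Raising `min_margin` sends more cases to human review and lowers the risk of a wrong automatic match, at the cost of more manual work.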

The good news? Face recognition systems have evolved, and all of these challenges can be solved using a Man-Machine approach along with Multimodal AI (cross-analysis of face, object and speech detection to increase the confidence score of the recognized individual’s identity).
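As a rough illustration of how cross-analysis can raise a confidence score, the sketch below fuses per-modality scores with a "noisy-OR" rule. This is one simple fusion rule chosen for the example, not a description of how any specific platform combines its detectors; it assumes the modalities are independent, and the modality names and scores are invented.

```python
def fuse_confidence(scores):
    """Noisy-OR fusion: 1 minus the product of (1 - score) per modality.

    scores maps a modality name to a confidence in [0, 1] that the
    same individual was detected. Assumes independent modalities.
    """
    remaining_doubt = 1.0
    for score in scores.values():
        if not 0.0 <= score <= 1.0:
            raise ValueError("scores must be in [0, 1]")
        remaining_doubt *= 1.0 - score
    return 1.0 - remaining_doubt


# Hypothetical evidence that the same person appears in a video segment.
evidence = {"face": 0.70, "speech": 0.50, "on_screen_text": 0.40}
fused = fuse_confidence(evidence)
```

With these inputs the fused score is higher than any single modality on its own, which is the effect multimodal analysis is after: a partially hidden face can still be confirmed by a voice or an on-screen name.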

Your Machine-based Technology is only as good as your Man-made Thesaurus

It’s important to note that there is no standard when it comes to a shared Thesaurus. Many companies rely on pre-existing datasets, but these can be limiting as users cannot alter them for their custom needs (e.g. by adding profiles). When building a custom database of unknown faces, it’s crucial to invest in the man-made creation of your digital Thesaurus.

For reference, in regard to information retrieval, a Thesaurus is:

“A form of controlled vocabulary that seeks to dictate semantic manifestations of metadata in the indexing of content objects. A thesaurus serves to minimise semantic ambiguity by ensuring uniformity and consistency in the storage and retrieval of the manifestations of content objects.” – Source

Simply put, in the context of face recognition, a Thesaurus is a database or list of known individuals which acts as a reference point to detect the same faces among thousands of assets which may include these individuals’ faces.

A thesaurus is composed of more than strings and photos: each entity is assigned several identifiers as references. For example, at Moments Lab we provide at least three identifiers for most of our customers:

1) An internal unique id

2) The customer’s legacy id for the entity

3) A Wikidata identifier (we consider it a universal id)

Using these identifier types is more practical (and is the only way to leverage semantic search and analysis). It’s also the best way to ensure machines can talk to other machines. For example, an entity that carries a Wikidata identifier stays highly compatible when it is moved to or shared with other systems.
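A thesaurus entry with these three identifiers could be modelled along the following lines. This is a minimal sketch, not Moments Lab's actual schema: the class name `ThesaurusEntity`, its fields, the `build_index` helper and the legacy id `CUST-0001` are hypothetical. The Wikidata id Q42 is the real entry for the author Douglas Adams, used here only as a familiar example.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ThesaurusEntity:
    """One known individual in a custom thesaurus (hypothetical schema)."""
    label: str
    legacy_id: Optional[str] = None     # the customer's own id
    wikidata_id: Optional[str] = None   # universal id, e.g. "Q42"
    internal_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    photo_paths: List[str] = field(default_factory=list)


def build_index(entities: List[ThesaurusEntity]) -> Dict[str, ThesaurusEntity]:
    """Map every known identifier to its entity so any id resolves to it."""
    index: Dict[str, ThesaurusEntity] = {}
    for entity in entities:
        for key in (entity.internal_id, entity.legacy_id, entity.wikidata_id):
            if key is not None:
                index[key] = entity
    return index


author = ThesaurusEntity(
    label="Douglas Adams",
    legacy_id="CUST-0001",   # invented customer id
    wikidata_id="Q42",
)
index = build_index([author])
```

Because all three ids point at the same object, another system that only knows the Wikidata id can still find the entity, which is the "machines talking to machines" benefit in practice.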

So if your custom Thesaurus is properly completed, the machine should be able to identify your list of individuals among your ingested audiovisual content.

Example: Training Your Own AI via a MAM Platform with built-in face recognition

It’s long been said that machines can’t do everything autonomously. And maybe if we circle back to Bledsoe’s ‘Man-Machine’ Approach we can see that he was onto something…

When Bledsoe was inputting manually derived faceprints into his database, he was essentially training his machine, because he had to create a non-existent database. But today, what if end-users were able to train their face recognition systems too, without any coding experience?

This is where the Man-Machine Approach resurfaces…with the addition of Multimodal AI.

If you’re a Media Manager working with thousands of internal media assets that feature unknown individuals who would not usually be recognized by a pre-existing dataset, then there should be an easy way to train your machine to recognize faces among your assets, without needing to be a skilled programmer.

Taking a media valorization platform as an example, once users upload all of their media assets (photos, videos, audio files), the system automatically tags and indexes files based on multimodal AI.

For the faces that it does not know, an end-user can manually update their Thesaurus list by uploading three photos of each individual they would like the machine to recognize among their thousands of assets. Once this is completed, the system will recognize these former ‘unknowns’. Via built-in face recognition technology, all individuals will be searchable via a semantic search bar.
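Under the hood, "uploading three photos" typically amounts to enrolling a reference template and comparing new faces against it. The sketch below shows one common way to do that: average the face embeddings and match by cosine similarity. It is an assumption about the general technique, not the platform's actual implementation; the three-dimensional vectors stand in for the output of a face embedding model (real embeddings have hundreds of dimensions), and the names, values and 0.8 threshold are invented.

```python
import math


def normalize(vector):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def enroll(embeddings):
    """Average the unit-length reference embeddings into one template."""
    unit = [normalize(e) for e in embeddings]
    mean = [sum(column) / len(unit) for column in zip(*unit)]
    return normalize(mean)


def cosine(a, b):
    """Cosine similarity between two vectors."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def identify(query, templates, threshold=0.8):
    """Return (name, score) for the best template, or (None, score)
    when even the best score falls below the threshold."""
    name, score = max(
        ((n, cosine(query, t)) for n, t in templates.items()),
        key=lambda pair: pair[1],
    )
    return (name, score) if score >= threshold else (None, score)


# Three toy "photos" per person, already converted to embeddings.
templates = {
    "alice": enroll([[0.9, 0.1, 0.0], [1.0, 0.0, 0.1], [0.8, 0.2, 0.0]]),
    "bob": enroll([[0.0, 1.0, 0.1], [0.1, 0.9, 0.0], [0.0, 0.8, 0.2]]),
}

match, score = identify([0.95, 0.05, 0.05], templates)   # resembles alice
unknown, _ = identify([0.0, 0.0, 1.0], templates)        # resembles nobody
```

Several reference photos help because averaging smooths out differences in lighting, angle and expression between individual shots, while the threshold keeps the system from forcing a name onto a face it has never been shown.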

By using this Man-Machine approach within an AI-powered media valorization platform with built-in face recognition, recognition capabilities and accuracy increase.

Now that’s what we call SOTA face recognition!
