5 Lessons Learnt from Deploying Agentic AI in Video Workflows

We've indexed millions of video hours for broadcasters, production companies, sports organizations, and brands. When we released the Discovery Agent six months ago, we thought we understood how teams would use it.

We were half right.

The patterns that emerged from analyzing 11,000 real-world prompts taught us more than any pilot program could. Here's what actually happens when agentic AI meets video workflows.

1. Context is the difference between results and relevance

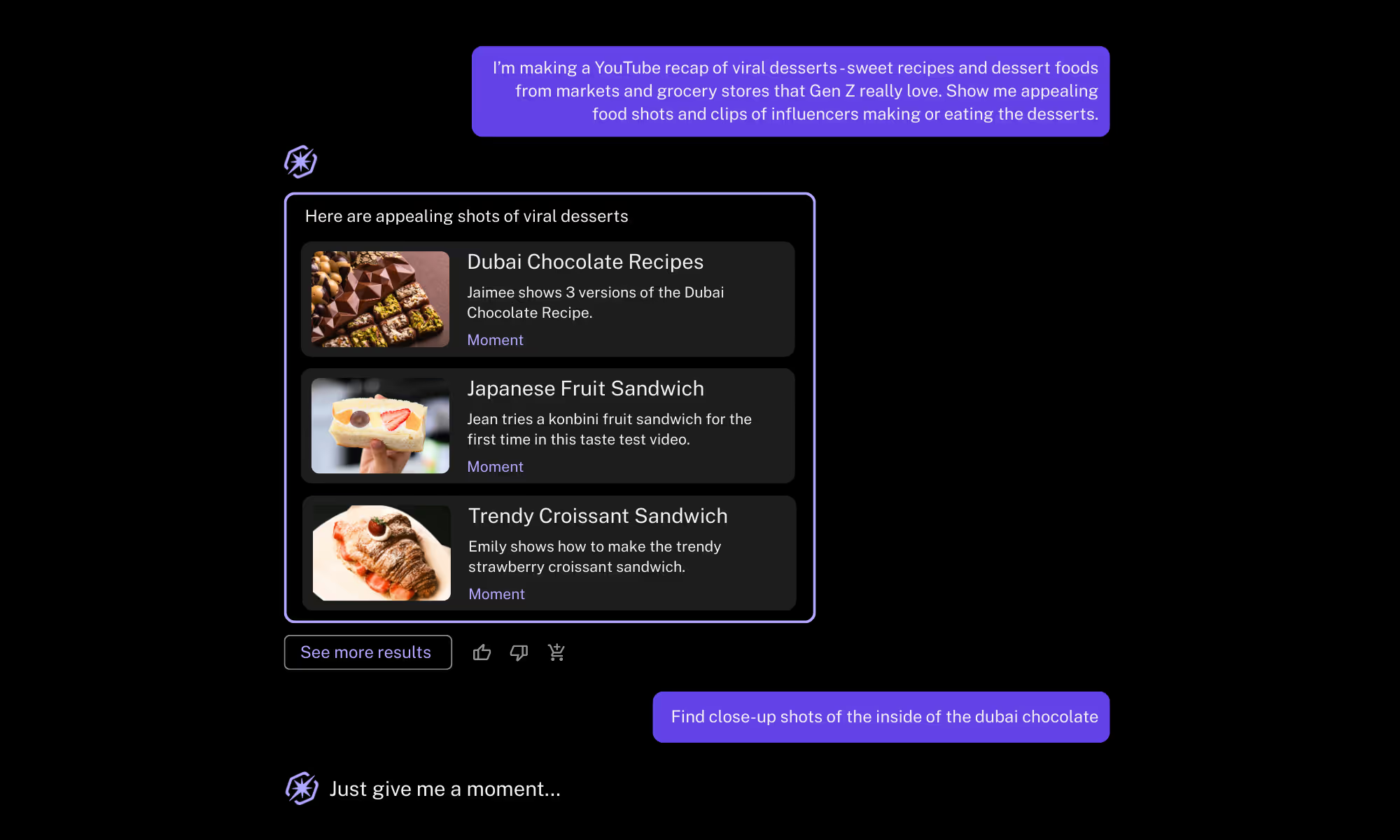

The first pattern appeared within days: people searching for moments prompt the agent with bare keywords, the way they'd search on Google.

A user at a production company behind an iconic cooking show types: "Find donuts."

The agent returns clips of donuts. Technically correct. Practically useless.

Now watch what happens with context:

"I'm creating a 10-minute YouTube story for Gen Z about the latest food trends. Can you find visually appealing donut shots from my show that could illustrate popular culture and social media aesthetics?"

Same request. Completely different results. The agent now understands the story, audience, platform, and narrative purpose. It surfaces moments that work for that specific creative vision.

This mirrors how you'd brief a human researcher. You wouldn't say "find donuts" to a colleague—you'd explain the project. Agents work the same way.

We addressed this limitation of human search behaviour with context engineering and by adding reasoning capabilities. Before searching, the agent queries external sources to build understanding. Mention "inflation" and it might pull context from recent news about food prices, then match that understanding against your archive.

Each Moments Lab workspace can be configured with specific context and background on how it will be used, for the agent's benefit. This matters because editorial standards vary widely between organizations: a production company operates differently to a news broadcaster or a sports brand.

Agent customization enforces an organization's specific terminology and tone, accuracy standards, and content guidelines. When an agent already knows how to interpret an organization's phrases and vocabulary, users no longer need to recap who they are and what they do.
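To make the idea concrete, here is a minimal sketch of how standing workspace context could be attached to a terse request. It assumes a hypothetical `WorkspaceContext` record and `build_agent_prompt` helper; neither is the Moments Lab API, and the glossary and guideline values are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class WorkspaceContext:
    """Hypothetical standing context, configured once per workspace."""
    organization_type: str
    audience: str
    glossary: dict[str, str] = field(default_factory=dict)
    guidelines: list[str] = field(default_factory=list)


def build_agent_prompt(ctx: WorkspaceContext, user_request: str) -> str:
    """Prepend workspace context so even a bare keyword query carries background."""
    lines = [
        f"Organization type: {ctx.organization_type}",
        f"Typical audience: {ctx.audience}",
    ]
    if ctx.glossary:
        lines.append("Glossary:")
        lines += [f"- {term}: {meaning}" for term, meaning in ctx.glossary.items()]
    if ctx.guidelines:
        lines.append("Guidelines:")
        lines += [f"- {rule}" for rule in ctx.guidelines]
    lines.append(f"User request: {user_request.strip()}")
    return "\n".join(lines)


# Illustrative values only.
ctx = WorkspaceContext(
    organization_type="production company (cooking show)",
    audience="Gen Z viewers on YouTube",
    glossary={"hero shot": "close-up of the finished dish"},
    guidelines=["Prefer visually appealing shots"],
)
prompt = build_agent_prompt(ctx, "Find donuts.")
print(prompt)
```

The user still types "Find donuts.", but the agent receives the organization type, audience, vocabulary, and guidelines along with it.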

2. You need agents to understand agents

Quality assurance breaks down fast at scale.

Thousands of conversations happened in month one of our agent launch. No product manager can analyze that volume to assess whether the agent is improving.

Thumbs up/down feedback? Most users ignore it.

So we built an agent to evaluate our agent.

Every prompt-response pair runs through an LLM-as-a-judge evaluation that scores the interaction. We track performance curves over time, identify outliers, and route edge cases to humans for review.
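The shape of such a pipeline can be sketched in a few lines. This is an illustration under stated assumptions, not our production evaluator: `keyword_overlap_judge` is a deliberately crude stand-in for the LLM judge, and the z-score threshold used to flag outliers is an arbitrary choice.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Callable


@dataclass
class Interaction:
    prompt: str
    response: str


def keyword_overlap_judge(item: Interaction) -> float:
    """Stand-in for an LLM judge: share of prompt words echoed in the response, on a 0-10 scale."""
    prompt_words = set(item.prompt.lower().split())
    response_words = set(item.response.lower().split())
    if not prompt_words:
        return 0.0
    return 10.0 * len(prompt_words & response_words) / len(prompt_words)


def evaluate(
    items: list[Interaction],
    judge: Callable[[Interaction], float],
    z_threshold: float = 1.5,
) -> tuple[list[float], float, list[Interaction]]:
    """Score every interaction, then flag those far from the mean for human review."""
    scores = [judge(item) for item in items]
    avg = mean(scores)
    spread = pstdev(scores)
    flagged = [
        item
        for item, score in zip(items, scores)
        if spread > 0 and abs(score - avg) / spread > z_threshold
    ]
    return scores, avg, flagged


interactions = [
    Interaction("find donut shots", "here are donut shots"),
    Interaction("find goal celebrations", "found goal celebrations clips"),
    Interaction("find market scenes", "three market scenes found"),
    Interaction("find baguette footage", "baguette footage from paris"),
    Interaction("find inflation interviews", "sorry nothing matched"),
]
scores, avg, flagged = evaluate(interactions, keyword_overlap_judge)
for item in flagged:
    print("route to human review:", item.prompt)
```

Swapping `keyword_overlap_judge` for a function that calls a model with a scoring rubric keeps the rest of the loop unchanged, which is the main reason to separate the judge from the aggregation.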

3. Live TV proves multi-agent coordination works

The IBC 2025 Accelerator project took agentic AI into broadcasting's most demanding environment: live television.

Twelve teams—ITN, BBC, Channel 4, plus partners including ourselves, Cuez, Google Cloud, and EVS—collaborated to build AI assistants for control room workflows. The scenario: one operator managing an entire newsroom using only voice commands.

Here's a short example of what happened:

The operator says: "I'm missing a clip in this segment. Can you find something relevant and blur any faces?"

An orchestrator agent (Google/Gemini) routes this request:

1. Queries the rundown agent: "What's this segment about?"

2. Asks the Discovery Agent (Moments Lab): "Find footage matching this topic."

3. Sends the selected clip to the Blurring agent (EVS): "Blur faces in this video."

4. Returns the processed, broadcast-ready clip.

No one coded explicit integrations between these systems. Each agent received a natural language "job description" of its capabilities. The orchestrator read those descriptions and figured out the workflow.
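The routing described above can be sketched as follows. This is a toy model, not the Accelerator implementation: the real orchestrator used an LLM to read each agent's description, whereas here a hypothetical `triggers` keyword list stands in for that reasoning, and the three agent functions return canned strings (the segment topic is invented).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    name: str
    description: str            # natural-language "job description"
    triggers: tuple[str, ...]   # keywords standing in for LLM reasoning (assumption)
    run: Callable[[str], str]


def rundown_agent(_: str) -> str:
    return "topic: food price inflation"  # invented example topic


def discovery_agent(topic: str) -> str:
    return f"clip matching [{topic}]"


def blurring_agent(clip: str) -> str:
    return f"{clip} with faces blurred"


AGENTS = [
    Agent("rundown", "Knows what each segment of the rundown is about.", ("segment",), rundown_agent),
    Agent("discovery", "Finds archive footage matching a topic.", ("find", "clip"), discovery_agent),
    Agent("blurring", "Blurs faces in a video clip.", ("blur",), blurring_agent),
]


def plan(request: str, agents: list[Agent]) -> list[Agent]:
    """Select agents whose trigger words appear in the request, in registry order."""
    text = request.lower()
    return [agent for agent in agents if any(word in text for word in agent.triggers)]


def orchestrate(request: str, agents: list[Agent]) -> str:
    """Pass each agent's output to the next agent in the plan."""
    payload = request
    for agent in plan(request, agents):
        payload = agent.run(payload)
    return payload


result = orchestrate(
    "I'm missing a clip in this segment. Can you find something relevant and blur any faces?",
    AGENTS,
)
print(result)
```

Note that no agent references another by name; the only coupling is the registry of descriptions the orchestrator reads.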

This runs on the A2A (Agent2Agent) protocol, the open standard Google released in April 2025 with backing from 50+ companies including Salesforce, SAP, ServiceNow, and MongoDB. When Microsoft adopted it three weeks later, it signaled genuine industry alignment, which has historically been rare.

A2A enables horizontal agent-to-agent communication using HTTP and JSON messaging. It complements MCP (Model Context Protocol), which handles vertical agent-to-tool connections. Together, they're creating an ecosystem where agents from different vendors collaborate without custom integrations.
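For a sense of what "HTTP and JSON messaging" looks like in practice, here is a rough sketch of a request body one agent might POST to another. It assumes a JSON-RPC 2.0 envelope; the method name `message/send` and the message field names reflect our reading of the A2A specification and should be verified against the current version before use. `build_a2a_request` is a hypothetical helper, and nothing is sent over the network.

```python
import json
import uuid


def build_a2a_request(text: str, request_id: int = 1) -> dict:
    """Build a JSON-RPC 2.0 envelope carrying one text message (field names are assumptions)."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "message/send",  # assumed method name; check the A2A spec
        "params": {
            "message": {
                "role": "user",
                "parts": [{"kind": "text", "text": text}],
                "messageId": str(uuid.uuid4()),
            }
        },
    }


request = build_a2a_request("Blur faces in this video.")
body = json.dumps(request, indent=2)  # this string would be the HTTP POST body
print(body)
```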

The vision? You'll start work in Slack or Teams with a request: "I need a two-minute highlight reel from yesterday's game featuring the goalie's best saves." The orchestrator routes to specialist agents, coordinates the work, and delivers results to your preferred editing interface, be it Avid Media Composer, Adobe Premiere Pro, DaVinci Resolve, or wherever you actually work.

4. How you search shapes what you create

Here's a pattern we noticed with a Middle Eastern broadcaster:

When covering economic stories, editors consistently pulled the same footage: a specific market scene, the same vendor, identical shots. Not because it was the best content, but because it was easy to find in their legacy system.

This reveals something important about the relationship between search tools and creativity.

When search relies on perfect metadata and pre-indexed keywords, you get repetitive storytelling. The system rewards using what's easy to retrieve, not what's narratively interesting.

But when you can prompt: "Show me unexpected reactions to price increases in local markets," you access moments that were technically in your archive but functionally invisible.

In France, inflation stories default to "the baguette index": shots of someone buying a baguette, used endlessly because that footage is tagged and findable. Agentic search lets you break that pattern: "Find moments where everyday people discuss the cost of living in casual settings, not formal interviews."

Search method determines narrative possibility. Better search unlocks better storytelling.

5. Organizational strategy matters more than technology

We've deployed agents internally across Moments Lab: 60+ people use them in Slack, in support workflows, and for team-specific tasks. At our scale, adoption is straightforward.

But 85% of AI initiatives fail according to Gartner, not because of technical problems, but organizational ones. McKinsey's 2025 research found that while 62% of organizations experiment with agents, only 39% see enterprise-level business impact.

The gap? Change management, governance, and strategy.

At enterprise scale, you need frameworks: which agents can access what data, where humans must be in the loop, particularly for approvals, and how agents are permitted to interact. Think of it like an org chart, but for AI systems—with the end user at the center rather than a CEO at the top.
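A governance framework of this kind can start as a small policy table. The sketch below is hypothetical throughout: the agent names, data sources, and the `authorize` and `can_call` helpers are invented to show the three questions (data access, human approval, agent-to-agent permissions) plus an audit trail.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AgentPolicy:
    readable_data: frozenset     # data sources the agent may access
    approval_actions: frozenset  # actions that require human sign-off
    may_call: frozenset          # other agents this agent may invoke


# Illustrative policies only.
POLICIES = {
    "discovery": AgentPolicy(frozenset({"archive"}), frozenset(), frozenset()),
    "publishing": AgentPolicy(
        frozenset({"archive", "rundown"}),
        frozenset({"publish"}),
        frozenset({"discovery"}),
    ),
}

AUDIT_LOG: list[tuple[str, str, str, str]] = []


def authorize(agent: str, action: str, data_source: str, approved_by: Optional[str] = None) -> str:
    """Return 'allow', 'deny', or 'needs_approval', and record the decision."""
    policy = POLICIES.get(agent)
    if policy is None or data_source not in policy.readable_data:
        decision = "deny"
    elif action in policy.approval_actions and approved_by is None:
        decision = "needs_approval"
    else:
        decision = "allow"
    AUDIT_LOG.append((agent, action, data_source, decision))
    return decision


def can_call(caller: str, callee: str) -> bool:
    """Check whether one agent is permitted to invoke another."""
    policy = POLICIES.get(caller)
    return policy is not None and callee in policy.may_call


print(authorize("discovery", "search", "archive"))
print(authorize("discovery", "search", "rundown"))
print(authorize("publishing", "publish", "archive"))
print(authorize("publishing", "publish", "archive", approved_by="editor"))
```

Defaulting to "deny" for unknown agents or data sources keeps the policy table as the single place where access is granted.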

Agent strategy means scoping properly. Each agent should excel at four to five specific tasks. Don't build one agent that handles search, compliance, audience analytics, and content generation. Build specialists. Use an orchestrator to route requests.

Success factors from high-performing organizations:

- Leadership treats AI as a long-term strategic priority, not a pilot program

- Teams redesign workflows around AI capabilities rather than bolting AI onto existing processes

- Organizations foster psychological safety so people feel comfortable experimenting and learning

- Clear governance establishes permissions, audit trails, and human-in-the-loop checkpoints

Start small. Deploy agents for specific, well-defined tasks where they will make an impact and/or reduce a friction point for users. This will drive user buy-in. Learn from real usage. Expand deliberately.

Quality data is non-negotiable

If nothing else, remember: everything runs on quality indexed data, especially when it comes to successful video search, retrieval or discovery. Without proper video understanding—shots, sequences, vector embeddings, natural language descriptions—agents have nothing to retrieve. The indexing layer, like that provided by MXT multimodal AI, fuels the entire system.
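To illustrate why the index matters, here is a minimal sketch of retrieval over indexed moments. The `IndexedMoment` record is a hypothetical shape, not the MXT schema, and the three-dimensional embeddings are hand-written toy values; a real system would get both the moment vectors and the query vector from an embedding model.

```python
import math
from dataclasses import dataclass


@dataclass
class IndexedMoment:
    video_id: str
    start_s: float
    end_s: float
    description: str        # natural-language description of the shot
    embedding: list[float]  # toy vector; real ones come from a model


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_embedding: list[float], index: list[IndexedMoment], top_k: int = 2) -> list[IndexedMoment]:
    """Rank indexed moments by similarity to the query vector."""
    ranked = sorted(index, key=lambda m: cosine(query_embedding, m.embedding), reverse=True)
    return ranked[:top_k]


INDEX = [
    IndexedMoment("vid-001", 12.0, 19.5, "Shopper reacts with surprise to a price tag at a street market", [0.9, 0.1, 0.0]),
    IndexedMoment("vid-002", 3.0, 8.0, "Customer buys a baguette at a bakery", [0.5, 0.5, 0.1]),
    IndexedMoment("vid-003", 40.0, 46.0, "Goalkeeper dives to save a penalty", [0.0, 0.1, 0.9]),
]

# Toy vector standing in for an embedded query about reactions to price increases.
results = search([1.0, 0.0, 0.0], INDEX)
for moment in results:
    print(moment.video_id, moment.description)
```

An empty or poorly described index returns nothing useful no matter how capable the agent on top of it is, which is the point of this section.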

Agentic AI in video workflows is still in its infancy. Users will surprise you with unexpected requests. Systems will need tuning. But the organizations moving now are defining the patterns that will shape the industry for years.

The question isn't whether agents will transform video production. It's whether you'll help shape how that transformation happens.

2026 is the year organizations that deploy production-ready agentic AI systems, not pilot programs, are poised to win.

Watch on-demand

Get the playbook for building your own agentic AI strategy in our webinar replay: Agentic AI for Video: Lessons from Early Deployments.

Want to know more about how Moments Lab can help your teams spend less time searching and more time creating value? Book a demo with us.

Take the time to customize your agent, and then train your teams to prompt like they're briefing a smart assistant, not querying a database.
