Release Note: Introducing OCR

What’s New at Newsbridge: Optical Character Recognition (OCR)

This latest release was driven by customer feedback, especially from newsrooms, where journalists need to automatically index and search for text that appears on screen (e.g. rolling banners, logos and names on clothing, background signage, etc.).

When applied to our signature Multimodal Indexing AI technology, OCR (Optical Character Recognition) adds another layer of enriched metadata, which leads to more advanced metadata tagging. In turn, this supercharges users' semantic search and asset retrieval experience, promoting what journalists do best... in-depth investigative research! 🔎

Whether customers are analyzing a newly emerging, complex news story or working with footage that offers little spoken information about geolocation, context or personalities, OCR is helping them get the most out of their content by leveraging the text that appears in video assets and still images.

Example: OCR detects unknown sports players and brand sponsors

When it comes to pulling together a recap in seconds, especially in the fast-paced world of sports, Newsbridge’s OCR feature is helping make the once unsearchable, searchable!

For example, in the extract below, we see a tennis player with his back to the camera, but panel signage in the background is giving us helpful hints! If you were a user working on a recap for this game and associated content indexing, it would be extremely helpful to have all players’ names plus any contextual information available to help speed up post-production workflows.

Thanks to OCR, we can see that the players’ names are extracted from the detected signage. This information is then added as metadata to the ingested content, allowing for advanced search and retrieval of specific moments in the game.

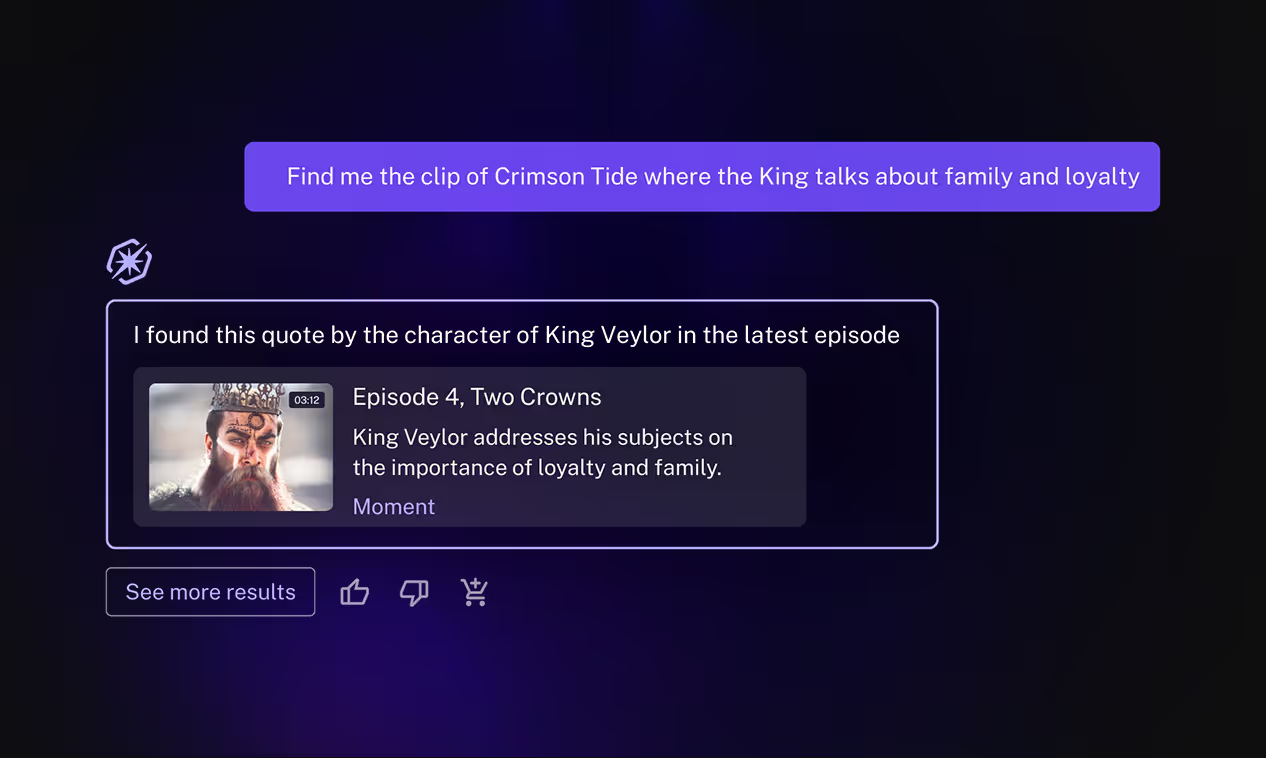

Step-by-Step: Here’s how it works in the platform

1. Here we see an emerging live-streamed video clip with little spoken information or context provided.

2. To access OCR capabilities, simply navigate to the ‘annotations’ icon on the navigational bar of your corresponding video clip.

3. From here, a right-hand panel will appear, giving you the option to select OCR as a detection method. This lists all text detected within the video images as annotations and references. Looking at the example below, we can quickly tell that this is a tour of a high-end shopping district from OCR-detected brand names like Cartier, Louis Vuitton...

4. This entire list of OCR-detected keywords is then searchable as annotations within Newsbridge’s semantic search bar, allowing users to find exact moments, even when they cannot be detected with traditional speech-to-text or facial recognition technologies.
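To make the idea behind step 4 concrete, here is a minimal sketch of how OCR-detected text could be turned into searchable, time-stamped annotations. It is an illustration only and not Newsbridge's actual implementation or API: the `OcrAnnotation` structure, the `build_index` and `search` helpers, and the timecodes are all hypothetical, made-up sample values.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class OcrAnnotation:
    """One piece of text detected in a video frame (hypothetical structure)."""
    text: str
    timecode_s: float  # position in the clip, in seconds (sample value)


def build_index(annotations):
    """Map each lowercased word to the sorted timecodes where it was detected."""
    index = defaultdict(set)
    for ann in annotations:
        for word in ann.text.lower().split():
            index[word].add(ann.timecode_s)
    return {word: sorted(times) for word, times in index.items()}


def search(index, query):
    """Return the timecodes at which every word of the query was detected."""
    words = query.lower().split()
    if not words:
        return []
    hits = set(index.get(words[0], []))
    for word in words[1:]:
        hits &= set(index.get(word, []))
    return sorted(hits)


# Made-up annotations, loosely based on the shopping-district example above.
annotations = [
    OcrAnnotation("Cartier", 12.0),
    OcrAnnotation("Louis Vuitton", 47.5),
    OcrAnnotation("Louis Vuitton", 63.0),
]
index = build_index(annotations)
print(search(index, "louis vuitton"))  # [47.5, 63.0]
```

In this sketch, a search for a brand name returns the moments in the clip where that text was on screen, which is the kind of lookup that speech-to-text or facial recognition alone would not provide.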

This new feature is a wonderful tool for journalists, sports rights holders, event organizers and more to further enhance the investigative research experience of connecting the ‘semantic dots’. It is especially useful for emerging stories with few spoken references or little for facial recognition to work with (e.g. backs turned to the camera, lesser-known personalities, etc.), and for archive asset management and semantic search.

About Moments Lab

Moments Lab (formerly Newsbridge) is a cloud media hub platform for live and archived content.

Powered by Multimodal Indexing AI and a data-driven indexing approach, Moments Lab provides unprecedented access to content by automatically detecting faces, objects, logos, written text, audio transcripts and semantic context.

Whether for managing and accessing live recordings, clipping highlights, future-friendly archiving, content retrieval, or content showcasing and monetization, the solution allows for smart and efficient media asset management.

Today our platform is used by worldwide TV Channels, Press Agencies, Sports Rights Holders, Production Houses, Journalists, Editors and Archivists to boost their production workflow and media ROI.
