What Is AI Video Discovery? An Updated Guide for 2026

Article overview:

- Better searchability: AI video discovery makes it possible to search video content by what's actually in it, not just metadata attached to files

- Simultaneous processing: Multimodal AI can process visual, audio, and textual content together, understanding relationships between what's seen and heard rather than analyzing each signal in isolation

- Build the business case: Benefits of using AI in video discovery systems include time savings, improved accuracy, better scalability, and new potential revenue streams from unlocked archives

The average enterprise now manages many petabytes of data (a petabyte is a quadrillion bytes), much of it sitting in cloud storage systems. Yet 80% of it goes largely untouched because no one can find what they need. Even with documented processes and ISO compliance in place, workers are still likely to save data, including media assets, in different ways, with different metadata, and in different folder structures.

And with cloud spending set to rise beyond $980 billion this year, all of those media assets need to start working harder for the business. How can you harness cloud, multimodal technology, and AI to access and monetize the assets in your production asset management system?

AI video discovery has entered the room.

What is AI video discovery?

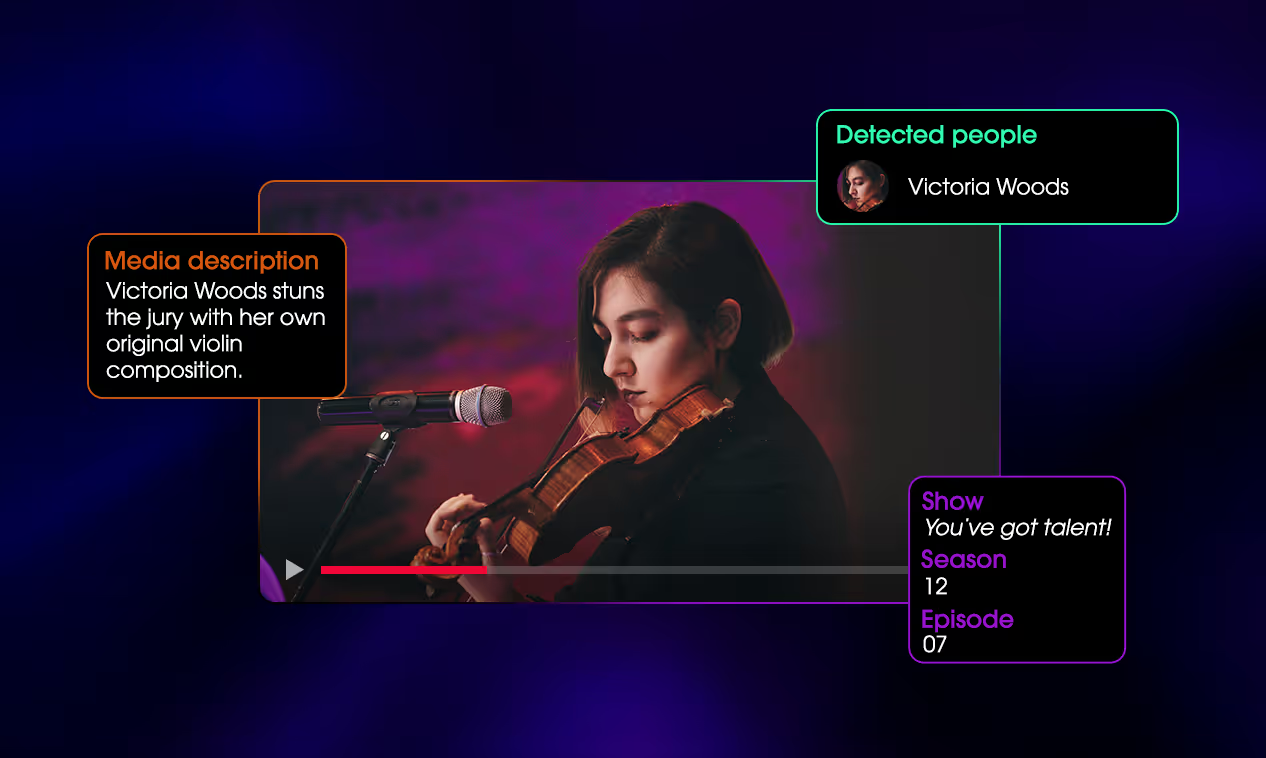

AI video discovery refers to the use of artificial intelligence to automatically analyze, index, and retrieve video content based on what's actually happening within the footage—not just the metadata attached to it. Unlike traditional search that relies on manually assigned tags and filenames, AI video discovery understands individual visual elements, spoken words, on-screen text, sounds, and the relationships between them.

This multimodal AI technology turns video from an opaque, time-consuming format into searchable, queryable data. A user can type "person in blue jacket walking through a crowd" and surface the exact frame needed from a three-hour recording, without anyone having manually logged that specific moment.

How AI video discovery works

Modern AI video discovery systems combine multiple technologies working in concert to understand video content at a deep level. Let’s take a look at the key components driving AI video discovery platforms in 2026.

Multimodal AI

Video is inherently multimodal. A single clip contains visual frames, audio tracks, speech, music, and on-screen text, as well as the temporal relationships between all of these elements.

Unfortunately, early AI systems could only process these signals separately. They would analyze images with one model, transcribe speech with another, and be left hoping keyword overlap would connect relevant results.

Current multimodal AI models, though, can process all of these signals simultaneously, creating unified representations that capture not only the individual elements but also how the visuals and audio relate to each other. Rather than embedding individual frames and storing image and text vectors separately, production-ready multimodal embedding models can produce dense vector representations of entire scenes.

This approach captures context that single-modality analysis misses. For example, a multimodal model can recognize that a cheering crowd means something different during a sports victory than during a protest march.

Automatic metadata generation

Traditional video workflows required human operators to watch footage and manually log every relevant detail—a process that could take three to five times the video's runtime. AI-powered metadata generation inverts this equation.

Modern AI video discovery systems can automatically extract and tag:

- Visual elements, such as objects, people, faces, logos, scenes, actions, colors, and compositions

- Audio content, such as speech transcription, speaker identification, music detection, and sound classification

- Text recognition, such as on-screen graphics, lower thirds, signage, and documents visible in frame

- Temporal markers, such as scene boundaries, shot changes, and significant moments

This automation can turn hours of manual logging into minutes of processing time. The resulting metadata is often richer and more consistent than human-generated tags, too, because the model can capture details that a fatigued operator might overlook after hours of reviewing footage.
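
To make the four categories above concrete, here is a minimal sketch of how automatically generated, time-coded tags might be stored and looked up. The `MetadataTag` schema, the `Modality` enum, and the `tags_at` helper are hypothetical illustrations, not any particular platform's format; real systems typically keep far richer records in a database or search index.

```python
from dataclasses import dataclass
from enum import Enum


class Modality(Enum):
    """The four kinds of automatically extracted metadata described above."""
    VISUAL = "visual"
    AUDIO = "audio"
    TEXT = "text"
    TEMPORAL = "temporal"


@dataclass(frozen=True)
class MetadataTag:
    """One machine-generated, time-coded tag (hypothetical schema)."""
    modality: Modality
    label: str         # e.g. "logo", "speaker change", "lower third"
    start_s: float     # start of the tagged span, in seconds
    end_s: float       # end of the tagged span, in seconds
    confidence: float  # model confidence between 0 and 1


def tags_at(tags: list[MetadataTag], t: float) -> list[MetadataTag]:
    """Return every tag whose time span covers second t (bounds inclusive)."""
    return [tag for tag in tags if tag.start_s <= t <= tag.end_s]


if __name__ == "__main__":
    index = [
        MetadataTag(Modality.VISUAL, "person in blue jacket", 12.0, 18.5, 0.91),
        MetadataTag(Modality.AUDIO, "crowd noise", 10.0, 30.0, 0.84),
        MetadataTag(Modality.TEXT, "lower third: press briefing", 40.0, 46.0, 0.97),
        MetadataTag(Modality.TEMPORAL, "shot change", 18.5, 18.5, 0.99),
    ]
    for tag in tags_at(index, 15.0):
        print(tag.modality.value, "|", tag.label)
```

Attaching start and end times to every tag, rather than keeping one tag list per file, is what allows a search to land on a moment instead of an entire recording.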

Semantic search

Semantic search represents a fundamental shift from pure keyword matching to seeking matches of meaning.

Traditional search required users to guess the exact terms someone used when tagging content. If a clip was tagged "automobile" but you searched "car," you'd miss it.

Semantic search, on the other hand, understands that "car," "automobile," "vehicle," and "sedan" share meaning. More importantly, it understands natural language queries—that is, how humans normally speak. Instead of constructing boolean searches with precise keywords, users can instead conversationally describe what they're looking for: "executive giving a presentation with charts visible behind them" or "outdoor footage shot at sunset."

Under the hood, the technology relies on vector embeddings: numerical representations of both content and queries in a shared mathematical space, where items with similar meanings cluster together. This lets users discover relevant content even when there's no literal keyword overlap between the query and the metadata.
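
A minimal sketch of the idea, assuming the embeddings have already been computed. The three-dimensional vectors below are hand-written toys (real multimodal embedding models produce vectors with hundreds of dimensions or more, and those dimensions are not human-readable), and `semantic_search` is a hypothetical brute-force ranker that uses cosine similarity, one common way to measure closeness in embedding space.

```python
import math

Vector = list[float]

# Hand-written toy embeddings. A real system would get these from a trained
# multimodal embedding model, and the vectors would be much longer.
TOY_INDEX: dict[str, Vector] = {
    "clip_tagged_automobile": [0.90, 0.30, 0.10],
    "clip_street_interview": [0.20, 0.50, 0.90],
    "clip_sunset_beach": [0.00, 0.90, 0.20],
}
# Toy embedding for the query "car": no keyword overlap with "automobile".
QUERY_CAR: Vector = [0.85, 0.35, 0.05]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query: Vector, index: dict[str, Vector], top_k: int = 2) -> list[tuple[str, float]]:
    """Brute-force ranking of every indexed clip by similarity to the query."""
    scored = [(clip_id, cosine_similarity(query, vec)) for clip_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    for clip_id, score in semantic_search(QUERY_CAR, TOY_INDEX):
        print(f"{clip_id}: {score:.3f}")
```

The clip tagged "automobile" ranks first for the "car" query even though the two share no keyword. In production, an approximate nearest-neighbor index usually replaces the brute-force loop so that search stays fast across millions of vectors.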

AI agents and conversational AI

The frontier of AI video discovery is moving beyond single-query searches towards agentic systems that can reason about video content across multiple steps. These AI agents don't just retrieve clips; they analyze, compare, and synthesize information from entire video libraries.

Agentic approaches use tool-equipped AI to navigate videos, iteratively refining searches based on intermediate results. A user might ask, "Find all instances where the CEO contradicted the Q2 guidance." Working over pre-indexed content, the agent could use company context to locate the CEO's various appearances, compare what was said in the speech transcriptions against the stated guidance, and flag potential contradictions: a task that would take a human hours of scouring through archival footage.

Conversational interfaces allow users to refine searches through dialogue. Initial results can be narrowed ("just the outdoor shots"), expanded ("include similar moments from other events"), or pivoted entirely ("actually, show me the audience reactions instead") without starting over.

The evolution of video discovery

To get us to this point, video discovery has progressed through distinct technological eras, each expanding what's possible.

The hands-on manual era

Before AI, video discovery meant human logging. Archivists and librarians watched footage in real time, creating written descriptions and timecodes. Large organizations employed teams of loggers working in shifts. The process was expensive, slow, and inevitably inconsistent, as different operators would describe identical content differently.

Any search meant querying these human-written descriptions, so discoverability depended entirely on whether a logger happened to note the specific detail you needed. Valuable footage remained buried simply because no one had thought to tag the element that would later become relevant.

The early impact of AI

The first wave of AI video analysis focused on discrete, well-defined tasks: face detection, speech-to-text transcription, optical character recognition. These systems analyzed each modality independently and produced structured outputs that could be searched.

While useful, early AI had significant limitations. Face detection could find faces but not identify who they belonged to without extensive training. Speech transcription worked poorly with accents, background noise, or domain-specific vocabulary. Results required significant human cleanup, and the systems couldn't understand relationships between what they detected.

Into the multimodal era

The systems we now use can process video holistically, understanding the interplay between visual and audio content. Large vision-language models can describe what's happening in a scene, not just list the objects present. They understand actions ("person signing a document"), relationships ("crowd surrounding the speaker"), and even abstract concepts ("tense negotiation").

This era also brought semantic search to media assets, enabling natural language queries that match intent rather than keywords. Processing speeds increased dramatically; many systems now analyze video faster than real-time, making it more practical to index content as it's captured rather than in batch processes days later.

The emergence of agentic AI for video discovery

Emerging agentic systems add reasoning and multi-step problem solving to video discovery platforms. Rather than returning search results for users to review, agents can be tasked with complex objectives and will autonomously determine what queries to run, what results to analyze, and how to synthesize findings.

These systems can be particularly powerful for long-form video understanding. While traditional search works well for finding specific moments, AI agents can answer questions that require understanding content across an entire video library—identifying patterns, tracking changes over time, or finding connections humans wouldn't think to search for.

AI video discovery vs traditional search

Traditional video search and AI-powered discovery differ fundamentally in what they can find and how users interact with them.

Traditional search operates on metadata, which is information about video files rather than the content within them. Users must know (or guess) the terminology used during tagging, construct precise queries, and manually review results to verify relevance. The approach works adequately when metadata is comprehensive and consistent, but fails when users don't know the right keywords or when important content was never properly tagged.

AI video discovery operates on the content itself. The system can understand what's visible and audible in footage, enabling searches that would be impossible with metadata alone. Users describe what they're looking for in plain conversational language, and the system surfaces relevant moments even if the video was never explicitly tagged with those details.

Key features to look for in AI video discovery technology

When evaluating AI video discovery solutions, certain capabilities can separate effective systems from limited ones. Make sure you’re evaluating platforms on the following features.

Deep content understanding

The system should comprehend video on multiple levels, such as identifying objects and faces, understanding actions and events, recognizing text and logos, and grasping the overall context of scenes. Surface-level detection ("there's a person") matters less than meaningful understanding ("executive presenting quarterly results to board members").

Natural language queries

Don’t make your users learn specialized query syntax or vocabulary. The most effective search systems can accept the same language people use when describing what they're looking for, handling variations in phrasing and terminology gracefully.

Temporal precision

Video discovery should return specific moments, not just files. The ability to jump directly to the relevant 30 seconds within a four-hour recording transforms the utility of search results.
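
A minimal sketch of how per-segment relevance scores could be turned into jump-to timecodes. It assumes the video has been split into fixed 10-second segments, each with a relevance score for the current query; the segment length, the threshold, and the `merge_hits` and `timecode` helpers are illustrative assumptions (real systems may score variable-length shots or scenes instead).

```python
def merge_hits(scores: list[float], segment_s: float, threshold: float) -> list[tuple[float, float]]:
    """Turn per-segment relevance scores into (start, end) moments in seconds.

    Runs of consecutive segments scoring at or above `threshold` become one moment.
    """
    moments: list[tuple[float, float]] = []
    start: float | None = None
    for i, score in enumerate(scores):
        if score >= threshold and start is None:
            start = i * segment_s                      # a relevant run begins
        elif score < threshold and start is not None:
            moments.append((start, i * segment_s))     # the run ended before segment i
            start = None
    if start is not None:                              # run lasts until the end
        moments.append((start, len(scores) * segment_s))
    return moments


def timecode(seconds: float) -> str:
    """Format a number of seconds as HH:MM:SS."""
    total = int(seconds)
    return f"{total // 3600:02d}:{total % 3600 // 60:02d}:{total % 60:02d}"


if __name__ == "__main__":
    scores = [0.1] * 1440                   # four hours of 10-second segments
    scores[1000:1003] = [0.92, 0.88, 0.95]  # three relevant segments in a row
    for start, end in merge_hits(scores, 10.0, 0.8):
        print(timecode(start), "->", timecode(end))  # prints 02:46:40 -> 02:47:10
```

Merging adjacent hits means the user sees one 30-second moment rather than three separate 10-second fragments.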

Multi-format support

Real-world video libraries contain footage from various sources: broadcast feeds, phone recordings, professional cameras, screen captures, archival formats. Robust systems can handle this diversity without requiring format conversion or separate processing pipelines.

Integration capabilities

AI discovery should be able to connect and integrate with existing media asset management systems, editing tools, and distribution platforms. Isolated discovery that requires manual export and re-import of findings creates friction that is likely to reduce adoption.

Processing scale and speed

Consider both throughput (how much content can be processed daily) and latency (how quickly newly ingested content becomes searchable). For time-sensitive applications like news broadcasts, near-real-time indexing is essential.

Accuracy controls

The best systems provide confidence scores and let users set precision thresholds based on their tolerance for false positives versus missed results. Some workflows need every possible match; others want only high-confidence results so reviewers don't waste time on false positives.
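
The trade-off can be measured on a labeled sample of results. This sketch uses a hypothetical `precision_recall` helper and made-up sample data to compute precision (the share of returned results that are relevant) and recall (the share of relevant items that were returned) at a given confidence threshold.

```python
# Hypothetical labeled sample: (model confidence, whether a reviewer judged it relevant).
SAMPLE = [
    (0.95, True), (0.90, True), (0.80, False),
    (0.70, True), (0.60, False), (0.40, True),
]


def precision_recall(scored: list[tuple[float, bool]], threshold: float) -> tuple[float, float]:
    """Precision and recall when only results with confidence >= threshold are returned."""
    kept = [relevant for confidence, relevant in scored if confidence >= threshold]
    true_positives = sum(kept)
    total_relevant = sum(relevant for _, relevant in scored)
    # By convention here, returning nothing counts as perfectly precise.
    precision = true_positives / len(kept) if kept else 1.0
    recall = true_positives / total_relevant if total_relevant else 1.0
    return precision, recall


if __name__ == "__main__":
    print(precision_recall(SAMPLE, 0.85))  # strict: (1.0, 0.5)
    print(precision_recall(SAMPLE, 0.50))  # lenient: (0.6, 0.75)
```

The strict threshold returns only relevant clips but misses half of them; the lenient one finds more of the relevant clips while letting some false positives through.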

The potential impact of AI in video discovery

AI video discovery can deliver measurable advantages across multiple dimensions. Build your business case for long-term investment by assessing potential benefits including time savings, improved accuracy, scalability, and reduced costs.

- Time-saving: The most immediate impact can be a dramatic reduction in time spent finding footage. Tasks that previously required hours of manual review can compress to seconds of search; some organizations report a 70-90 percent reduction in content discovery time after implementing AI systems.

- Accuracy: AI systems are able to find content that human search would likely miss. They don't forget to check certain folders, don't get fatigued after hours of review, and don't have blind spots based on individual knowledge gaps. Semantic search helps to surface relevant content even when it wasn't explicitly tagged, reducing the "I know we have footage of that somewhere" frustration.

- Scalability: Manual logging costs scale linearly with content volume: twice as much footage requires twice as many logging hours. AI video processing scales differently. Once the infrastructure is in place, the marginal cost per hour of video can decrease. Organizations can finally process their entire archives rather than prioritizing only recent or high-value content.

- Cost reduction and new revenue: Beyond direct labor savings, AI video discovery helps to create value by unlocking previously inaccessible content. Archived footage that sat unused because no one could find it becomes available for licensing, repurposing, and monetization.
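
The linear scaling of manual logging can be made concrete with the three-to-five-times-runtime estimate quoted earlier in this article. The archive sizes below are hypothetical examples chosen for illustration, not benchmarks.

```python
def manual_logging_hours(footage_hours: float, runtime_multiple: float) -> float:
    """Human logging effort if each footage hour takes `runtime_multiple` hours to log."""
    return footage_hours * runtime_multiple


# Hypothetical archive sizes; 3x and 5x are the low and high estimates from the text.
for footage in (1_000, 2_000):
    low = manual_logging_hours(footage, 3)
    high = manual_logging_hours(footage, 5)
    print(f"{footage:,} h of footage -> {low:,} to {high:,} logging hours")
```

A 1,000-hour archive works out to 3,000 to 5,000 logging hours, and doubling the archive doubles that effort, which is the cost curve AI processing aims to flatten.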

AI video discovery: Use cases by industry

Different industries are leveraging AI video discovery for distinct workflows and objectives.

Speedy search powers newsrooms

Breaking news demands speed. When a story develops, producers need relevant archive footage immediately—historical context, previous statements from key figures, related incidents. AI discovery enables searches like "protests at this location in the past five years" or "previous interviews with this official" that would be near-impossible under deadline pressure with traditional search. Real-time indexing means footage from morning press conferences becomes searchable by the time of the afternoon broadcast.

Production companies maximize ROI

Entertainment and commercial production generates enormous footage volumes. A single film might produce 500 hours of raw footage to yield two hours of finished content. AI discovery helps editors find specific takes, locate B-roll matching particular moods or compositions, and identify usable moments across multiple shooting days.

The technology also supports better asset reuse. Footage shot for one project can be surfaced for others, maximizing return on production investment.

Unlocking new revenue for sports broadcasting

Sports broadcasting has unique discovery needs: finding specific plays, tracking individual athletes across games, identifying moments of peak excitement. AI video discovery platforms can distinguish a routine play from a highlight-worthy moment, automatically identifying goals, penalties, spectacular saves, and crowd reactions.

Rights holders can use AI video discovery to create highlight packages rapidly, personalize content for different audiences (focusing on specific teams or players), and identify licensable moments across massive game archives.

Streamlined workflows for brand marketing teams

Marketing teams manage growing libraries of brand content as they rack up commercials, social media clips, event footage, user-generated content, and more. Finding on-brand imagery for campaigns means searching not just by keyword but by visual style, mood, and brand guideline compliance.

AI video discovery enables searches like "outdoor footage with our product visible, positive emotional tone, summer lighting"—something that traditional search could not handle. Brand safety applications can also automatically flag content that might conflict with company guidelines.

Making archives more accessible

Cultural institutions, government agencies and media companies hold historical video archives representing irreplaceable documentation. Much of this content was never fully catalogued—or was catalogued decades ago using standards and terminology that don't match modern search needs.

AI video processing can make these archives more accessible without requiring a complete re-cataloguing project. Users can search visual content directly, discovering materials that were always there but were difficult to surface using existing metadata.

Limitations and challenges of AI video discovery

While it’s powerful, AI video discovery does come with constraints that users should understand, including these:

- Processing demands: Video analysis requires significant computational resources. Processing hour-long videos, especially at high resolution, can be time-consuming and expensive, and real-time analysis remains challenging for complex AI models.

- Accuracy limitations: No system achieves 100% accuracy. False positives (irrelevant results) and false negatives (missed relevant content) occur, particularly with unusual content, poor-quality footage, or queries involving subtle or subjective characteristics. Current systems can also struggle with information-dense, hour-long videos where context spans extended timeframes.

- Bias concerns: AI models can inherit biases from their training data. Systems may perform differently across demographics, cultural contexts, or content types depending on what they were trained to recognize, and representation bias in training data can lead to lower accuracy for underrepresented groups.

- Context gaps: While AI understands what's visible and audible, it may miss context that requires external knowledge. A system might identify a person without recognizing their significance, or detect an event without understanding its historical importance.

- Integration complexity: Implementing AI discovery often requires infrastructure changes, workflow adjustments, and staff training. The technology delivers value only if people actually use it, which requires thoughtful change management.

- Privacy and rights: Automated face recognition and content analysis raise privacy considerations. Organizations must navigate consent requirements, data protection regulations, and ethical guidelines around automated surveillance capabilities.

The future of AI video discovery

Technology has always moved fast, and AI can feel like it’s moving even faster. In media and production asset management, multimodal AI and AI agents are set to continue reshaping how organizations interact with their media archives. Keep an eye out for these trends in 2026 and beyond.

Semantic search foundation

Semantic understanding looks set to become an expectation rather than a differentiator—every video platform will need to offer some form of natural language search. Users’ baseline expectation will shift from "can I search video content?" to "how well does the system understand nuanced queries?"

Improvements in multimodal foundation models will likely enable increasingly sophisticated understanding—recognizing not just what's visible, but why it matters, how it relates to other content, and what it implies.

Conversational refinement

Interactive, dialogue-based discovery could replace single-query search for complex information needs. Users want to hold conversations with AI systems, progressively refining what they're looking for based on initial results. These multimodal AI systems will be able to ask clarifying questions, suggest related content, and learn from user feedback within sessions.

This conversational approach helps to make video discovery accessible to users who don't know exactly what they're looking for, in turn enabling exploratory search that can surface unexpected yet valuable content.

Combining quick results with sophisticated analysis

The most effective systems will seek to blend semantic search for rapid retrieval with agentic reasoning for complex analysis. Simple queries ("show me the CEO interview") will be able to return instant results, while sophisticated requests ("analyze how our messaging about sustainability has evolved across all executive communications this year") will trigger agent-driven investigation.

These hybrid systems, such as MXT-2, will be able to handle the full spectrum of discovery needs—from quick lookups during editing to strategic analysis for planning—within unified interfaces, giving users more power to create and monetize content.

AI video discovery: Key takeaways

- AI video discovery makes it possible to search video content by what's actually in it, not just metadata attached to files—transforming video from an opaque format into queryable data.

- Multimodal AI can process visual, audio, and textual content together, understanding relationships between what's seen and heard rather than analyzing each signal in isolation.

- The technology has evolved from manual logging through single-modality AI to multimodal systems, with agentic AI representing the emerging frontier for complex video understanding.

- Benefits span time savings, improved accuracy, better scalability, and new potential revenue streams from unlocked archives.

- Industry applications range from breaking news production to sports highlights, brand content management, and cultural archive accessibility.

- Limitations include processing demands, accuracy constraints with complex content, potential bias, and privacy considerations that organizations must address in implementation.

- The future combines semantic search for rapid retrieval with conversational interfaces and agentic reasoning for increasingly sophisticated video discovery needs.

Ready to see if Moments Lab’s AI-powered video discovery platform is the right fit for you? Contact us for a demo.

AI video discovery: Frequently asked questions

What types of video can AI discovery analyze?

Modern systems can handle virtually any video format and source: broadcast recordings, raw camera footage, screen captures, phone videos, archival content, streaming media, and more. However, quality limitations apply; extremely low resolution or heavily degraded footage may yield reduced accuracy.

Does AI video discovery require manual setup for each video library?

No. Unlike traditional systems that require extensive tagging before content is searchable, AI discovery can process and index video automatically. Accuracy can improve with domain-specific customization, however, such as training the system to recognize the particular faces, logos, or terminology relevant to your organization.

Can AI discover content that was never tagged?

Yes, this is one of the primary advantages of AI video search over traditional search techniques. AI analyzes actual video content, so it can find relevant moments regardless of whether anyone previously identified and tagged them. Archives that were minimally catalogued can become fully searchable once processed.

What about privacy and face recognition concerns?

Organizations must configure AI discovery systems in compliance with applicable privacy regulations and ethical guidelines, such as the EU's General Data Protection Regulation (GDPR) and the US Children's Online Privacy Protection Act (COPPA). Many platforms offer controls to disable face recognition, restrict certain analysis capabilities, or limit who can search for specific content types.

How quickly does new content become searchable?

Processing speeds vary by system and content complexity, but many platforms can now process video faster than real-time.
